What's up with all those equals signs anyway?

The article explores the history and purpose of the equal sign (=), delving into its mathematical and linguistic origins, as well as its evolution in computer programming and various fields of study.

Rentahuman – The Meatspace Layer for AI

Rentahuman.ai is an AI-powered platform that connects individuals with virtual assistants, offering a range of services including administrative support, research, and content creation. The platform leverages artificial intelligence and machine learning to provide personalized, on-demand assistance to users.

Show HN: Minikv – Distributed key-value and object store in Rust (Raft, S3 API)

Hi HN,

I'm Emilie, I have a literature background (which explains the well-written documentation!) and I've been learning Rust and distributed systems by building minikv over the past few months. It recently got featured in Programmez! magazine: https://www.programmez.com/actualites/minikv-un-key-value-st...

minikv is an open-source, distributed storage engine built for learning, experimentation, and self-hosted setups. It combines a strongly-consistent key-value database (Raft), S3-compatible object storage, and basic multi-tenancy.

Features/highlights:

- Raft consensus with automatic failover and sharding
- S3-compatible HTTP API (plus REST/gRPC APIs)
- Pluggable storage backends: in-memory, RocksDB, Sled
- Multi-tenant: per-tenant namespaces, role-based access, quotas, and audit
- Metrics (Prometheus), TLS, JWT-based API keys
- Easy to deploy (single binary, works with Docker/Kubernetes)

Quick demo (single node):

```bash
git clone https://github.com/whispem/minikv.git
cd minikv
cargo run --release -- --config config.example.toml
curl localhost:8080/health/ready

# S3 upload + read
curl -X PUT localhost:8080/s3/mybucket/hello -d "hi HN"
curl localhost:8080/s3/mybucket/hello
```

Docs, cluster setup, and architecture details are in the repo. I’d love to hear feedback, questions, ideas, or your stories running distributed infra in Rust!

Repo: https://github.com/whispem/minikv

Crate: https://crates.io/crates/minikv
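
For readers who would rather script the quick demo than use curl, the same upload and read can be sketched with Python's standard library. This is a minimal sketch, not part of minikv: the helper names `build_put`, `build_get`, and `send` are hypothetical, and it assumes a single node is listening on `localhost:8080` and exposes the `/s3/<bucket>/<key>` paths used in the demo.

```python
import urllib.request

# Address taken from the quick demo; adjust if your node listens elsewhere.
BASE_URL = "http://localhost:8080"


def build_put(bucket: str, key: str, body: bytes) -> urllib.request.Request:
    """Build (but do not send) the PUT request that uploads an object."""
    return urllib.request.Request(
        f"{BASE_URL}/s3/{bucket}/{key}", data=body, method="PUT"
    )


def build_get(bucket: str, key: str) -> urllib.request.Request:
    """Build (but do not send) the GET request that reads an object back."""
    return urllib.request.Request(f"{BASE_URL}/s3/{bucket}/{key}", method="GET")


def send(request: urllib.request.Request, timeout: float = 5.0) -> bytes:
    """Send a prepared request and return the raw response body."""
    with urllib.request.urlopen(request, timeout=timeout) as response:
        return response.read()


# With a node running, the demo's two S3 calls would look like this:
#   send(build_put("mybucket", "hello", b"hi HN"))
#   print(send(build_get("mybucket", "hello")).decode())
```

Building the request separately from sending it keeps the URL and method easy to inspect without a running server.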

Paris prosecutors raid France offices of Elon Musk's X

The article reports that Paris prosecutors raided the French offices of X, the social media company owned by Elon Musk.

Show HN: difi – A Git diff TUI with Neovim integration (written in Go)

difi is a terminal UI (TUI) for viewing Git diffs, written in Go, with Neovim integration.

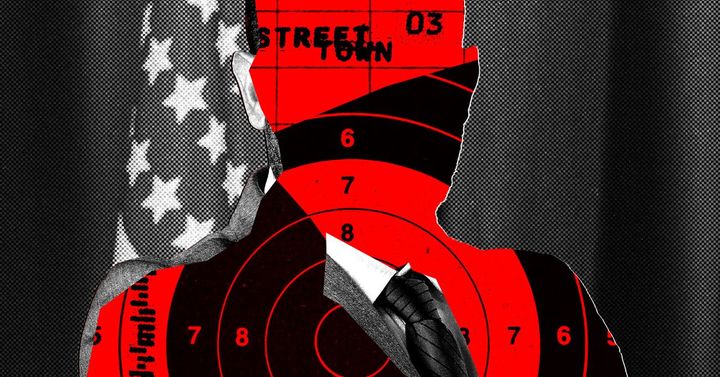

Data Brokers Can Fuel Violence Against Public Servants

The article explores how data brokers can enable violence against public servants by selling sensitive personal information, which can be used to harass or threaten them. It highlights the need for better regulation and oversight to protect the privacy and safety of government officials and other public figures.

Show HN: Sandboxing untrusted code using WebAssembly

Hi everyone,

I built a runtime to isolate untrusted code using wasm sandboxes.

Basically, it protects your host system from problems that untrusted code can cause. We’ve had a great discussion about sandboxing in Python lately that elaborates a bit more on the problem [1]. In TypeScript, wasm integration is even more natural thanks to the close proximity between both ecosystems.

The core is built in Rust. On top of that, I use WASI 0.2 via wasmtime and the component model, along with custom SDKs that keep things as idiomatic as possible.

For example, in Python we have a simple decorator:

    from capsule import task

    @task(
        name="analyze_data",
        compute="MEDIUM",
        ram="512mb",
        allowed_files=["./authorized-folder/"],
        timeout="30s",
        max_retries=1
    )
    def analyze_data(dataset: list) -> dict:
        """Process data in an isolated, resource-controlled environment."""
        # Your code runs safely in a Wasm sandbox
        return {"processed": len(dataset), "status": "complete"}

And in TypeScript:

    import { task } from "@capsule-run/sdk"

    export const analyze = task({
        name: "analyzeData",
        compute: "MEDIUM",
        ram: "512mb",
        allowedFiles: ["./authorized-folder/"],
        timeout: 30000,
        maxRetries: 1
    }, (dataset: number[]) => {
        return {processed: dataset.length, status: "complete"}
    });
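
The two snippets above express the same limits in different forms: the Python decorator takes `timeout="30s"`, while the TypeScript call takes `timeout: 30000`, which is presumably milliseconds. As a rough illustration of how such human-readable limits can be normalized to numbers, here is a minimal sketch. It is not Capsule's implementation: the helper names are hypothetical, and treating `mb` as 1024 * 1024 bytes is an assumption.

```python
import re

# Assumed unit tables; the units Capsule actually accepts may differ.
_DURATION_MS = {"ms": 1, "s": 1_000, "m": 60_000}
_SIZE_BYTES = {"kb": 1024, "mb": 1024**2, "gb": 1024**3}


def _parse(value: str, units: dict[str, int]) -> int:
    """Parse '<integer><unit>' (e.g. '30s') into a base-unit integer."""
    match = re.fullmatch(r"(\d+)\s*([a-z]+)", value.strip().lower())
    if match is None or match.group(2) not in units:
        raise ValueError(f"unrecognized value: {value!r}")
    return int(match.group(1)) * units[match.group(2)]


def parse_timeout_ms(value: str) -> int:
    """Convert a duration string such as '30s' to milliseconds."""
    return _parse(value, _DURATION_MS)


def parse_ram_bytes(value: str) -> int:
    """Convert a size string such as '512mb' to bytes."""
    return _parse(value, _SIZE_BYTES)
```

Under these assumptions, `parse_timeout_ms("30s")` yields the same `30000` that the TypeScript example passes directly.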

It's still quite early, but I'd love feedback. I’ll be around to answer questions.

GitHub: https://github.com/mavdol/capsule

[1] https://news.ycombinator.com/item?id=46500510

GitHub Browser Plugin for AI Contribution Blame in Pull Requests

The article discusses a browser plugin for GitHub that shows AI contribution blame in pull requests, providing transparency and accountability around the use of AI in software development.

A WhatsApp bug lets malicious media files spread through group chats

A security vulnerability in WhatsApp allows malicious media files to spread through group chats, posing a potential threat to users' devices and data. The bug enables the execution of malicious code on targeted devices, highlighting the importance of maintaining the security and privacy of messaging applications.

Show HN: Inverting Agent Model (App as Clients, Chat as Server and Reflection)

Hello HN. I’d like to start by saying that I am a developer who started this research project to challenge myself. I know standard protocols like MCP exist, but I wanted to explore a different path and have some fun creating a communication layer tailored specifically for desktop applications.

The project is designed to handle communication between desktop apps in an agentic manner, so the focus is strictly on this IPC layer (forget about HTTP API calls).

At the heart of RAIL (Remote Agent Invocation Layer) are two fundamental concepts. The names might sound scary, but remember this is a research project:

-Memory Logic Injection + Reflection

-Paradigm shift: The Chat is the Server, and the Apps are the Clients.

Why this approach? The idea was to avoid creating huge wrappers or API endpoints just to call internal methods. Instead, the agent application passes its own instance to the SDK (e.g., RailEngine.Ignite(this)).

Here is the flow that I find fascinating:

-The App passes its instance to the RailEngine library running inside its own process.

-The Chat (Orchestrator) receives the manifest of available methods. The Model decides what to do and sends the command back via Named Pipe.

-The Trigger: The RailEngine inside the App receives the command and uses Reflection on the held instance to directly perform the .Invoke().

Essentially, I am injecting the "Agent Logic" directly into the application memory space via the SDK, allowing the Chat to pull the trigger on local methods remotely.
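
The flow above can be sketched in Python, which offers reflection through `inspect` and `getattr`. This is only an illustration of the idea, not RAIL's code: `NotesApp`, `build_manifest`, and `invoke` are hypothetical names, the JSON command shape is an assumption, and the Named Pipe transport is left out so the example stays self-contained.

```python
import inspect
import json


class NotesApp:
    """Hypothetical application object standing in for a desktop app."""

    def __init__(self) -> None:
        self.notes: list[str] = []

    def add_note(self, text: str) -> int:
        """Store a note and return the new note count."""
        self.notes.append(text)
        return len(self.notes)

    def _internal_reset(self) -> None:
        """Private helper that should never be exposed to the orchestrator."""
        self.notes.clear()


def build_manifest(instance: object) -> list[dict]:
    """List the public methods of an instance with their parameter names."""
    manifest = []
    for name, method in inspect.getmembers(instance, predicate=inspect.ismethod):
        if name.startswith("_"):
            continue  # skip private and dunder methods
        params = list(inspect.signature(method).parameters)
        manifest.append({"name": name, "params": params})
    return manifest


def invoke(instance: object, command_json: str):
    """Resolve the named method on the held instance and call it."""
    command = json.loads(command_json)
    name = command["method"]
    if name.startswith("_"):
        raise PermissionError(f"refusing to call private method: {name}")
    method = getattr(instance, name)
    return method(**command.get("args", {}))
```

Here the app would hand `NotesApp()` to the engine, the orchestrator would receive the output of `build_manifest`, and a command such as `{"method": "add_note", "args": {"text": "hi"}}` would be passed to `invoke`.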

A note on the Repo: The GitHub repository has become large. The core focus is RailEngine and RailOrchestrator. You will find other connectors (C++, Python) that are frankly "trash code" or incomplete experiments. I forced RTTR in C++ to achieve reflection, but I'm not convinced by it. Please skip those; they aren't relevant to the architectural discussion.

I’d love to focus the discussion on memory-managed languages (like C#/.NET) and ask you:

-Architecture: Does this inverted architecture (Apps "dialing home" via IPC) make sense for local agents compared to the standard Server/API model?

-Performance: Regarding the use of Reflection for every call—would it be worth implementing a mechanism to cache methods as Delegates at startup? Or is the optimization irrelevant considering the latency of the LLM itself?

-Security: Since we are effectively bypassing the API layer, what would be a hypothetical security layer to prevent malicious use? (e.g., a capability manifest signed by the user?)

I would love to hear architectural comparisons and critiques.
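
To make the performance and security questions concrete, here is a minimal Python sketch of one possible answer to both: resolve the methods once at startup into a lookup table (a rough analogue of caching delegates in .NET) and only admit names that appear on an allowlist. Everything here is hypothetical: `Calculator` and `Dispatcher` are illustrative names, the allowlist stands in for a capability manifest, and signing or verifying that manifest is not shown.

```python
import inspect
from typing import Any, Callable


class Calculator:
    """Hypothetical app object with one safe and one sensitive method."""

    def add(self, a: int, b: int) -> int:
        return a + b

    def shutdown(self) -> str:
        return "stopped"


class Dispatcher:
    """Resolves allowed methods once, then dispatches by dictionary lookup."""

    def __init__(self, instance: object, allowed: set[str]) -> None:
        # Reflection happens here, once, instead of on every call.
        self._table: dict[str, Callable[..., Any]] = {
            name: method
            for name, method in inspect.getmembers(
                instance, predicate=inspect.ismethod
            )
            if name in allowed
        }

    def call(self, name: str, **kwargs: Any) -> Any:
        """Invoke a cached method, rejecting anything not on the allowlist."""
        try:
            method = self._table[name]
        except KeyError:
            raise PermissionError(f"method not allowed: {name}") from None
        return method(**kwargs)
```

With `Dispatcher(Calculator(), {"add"})`, a call to `add` succeeds while a call to `shutdown` raises `PermissionError`. Whether the saved lookup time matters next to model latency is exactly the open question the post raises; the sketch does not measure it.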

Boring Go – A practical guide to writing boring, maintainable Go

The article 'Boring Go' explores the benefits of writing simple, straightforward Go code that is easy to understand and maintain, rather than overly complex or feature-rich implementations. It emphasizes the value of prioritizing clarity, readability, and maintainability over perceived technical sophistication.

Israeli Military Found Gaza Health Ministry Death Toll Was Accurate

The article reports that the Israeli military found the Gaza Health Ministry's death toll to be accurate, despite critics' arguments that the figures may be inflated or distorted for political purposes.

The world is trying to log off U.S. tech

The article explores the growing backlash against big tech companies and the emergence of alternative social media platforms, such as Upscrolled, that aim to provide a more ethical and user-centric approach to online interactions and content moderation.

Mattias Krantz Built a Guitar Held Together by Magnets with Strings That Float

The article discusses a project by Swedish artist Mattias Krantz, who has created a guitar with strings that hover magnetically above the instrument's body. This innovative design allows the strings to vibrate freely, producing a unique and ethereal sound.

Europe's tech sovereignty watch (74% of EU companies depend on US tech services)

The article tracks Europe's tech sovereignty, reporting that 74% of EU companies depend on US tech services.

Proton: We're giving over $1.27M to support a better internet

Proton, a privacy-focused tech company, is giving over $1.27 million to support a better internet, with the money raised through its lifetime fundraiser.

Show HN: LUML – an open source (Apache 2.0) MLOps/LLMOps platform

Hi HN,

We built LUML (https://github.com/luml-ai/luml), an open-source (Apache 2.0) MLOps/LLMOps platform that covers experiments, registry, LLM tracing, deployments and so on.

It separates the control plane from your data and compute. Artifacts are self-contained. Each model artifact includes all metadata (including the experiment snapshots, dependencies, etc.), and it stays in your storage (S3-compatible or Azure).

File transfers go directly between your machine and storage, and execution happens on compute nodes you host and connect to LUML.

We’d love you to try the platform and share your feedback!

Show HN: I built an AI movie making and design engine in Rust

I've been a photons-on-glass filmmaker for over ten years, and I've been developing ArtCraft for myself, my friends, and my colleagues.

All of my film school friends have a lot of ambition, but the production pyramid doesn't allow individual talent to shine easily. 10,000 students go to film school, yet only a handful get to helm projects they want with full autonomy - and almost never at the blockbuster budget levels that would afford the creative vision they want. There's a lot of nepotism, too.

AI is the personal computer moment for film. The DAW.

One of my friends has done rotoscoping with live actors:

https://www.youtube.com/watch?v=Tii9uF0nAx4

The Corridor folks show off a lot of creativity with this tech:

https://www.youtube.com/watch?v=_9LX9HSQkWo

https://www.youtube.com/watch?v=DSRrSO7QhXY

https://www.youtube.com/watch?v=iq5JaG53dho

We've been making silly shorts ourselves:

https://www.youtube.com/watch?v=oqoCWdOwr2U

https://www.youtube.com/watch?v=H4NFXGMuwpY

The secret is that a lot of studios have been using AI for well over a year now. You just don't notice it, and they won't ever tell you because of the stigma. It's the "bad toupee fallacy" - you'll only notice it when it's bad, and they'll never tell you otherwise.

Comfy is neat, but I work with folks that don't intuit node graphs and that either don't have graphics cards with adequate VRAM, or that can't manage Python dependencies. The foundation models are all pretty competitive, and they're becoming increasingly controllable - and that's the big thing - control. So I've been working on the UI/UX control layer.

ArtCraft has 2D and 3D control surfaces, where the 3D portion can be used as a strong and intuitive ControlNet for "Image-to-Image" (I2I) and "Image-to-Video" (I2V) workflows. It's almost like a WYSIWYG, and I'm confident that this is the direction the tech will evolve for creative professionals rather than text-centric prompting.

I've been frustrated with tools like Gimp and Blender for a while. I'm no UX/UI maestro, but I've never enjoyed complicated tools - especially complicated OSS tools. Commercial-grade tools are better. Figma is sublime. An IDE for creatives should be simple, magical, and powerful.

ArtCraft lets you drag and drop from a variety of creative canvases and an asset drawer easily. It's fast and intuitive. Bouncing between text-to-image for quick prototyping, image editing, 3D generation, and 3D compositing is fluid. It feels like "crafting" rather than prompting or node graph wizardry.

ArtCraft, being a desktop app, lets us log you into 3rd party compute providers. I'm a big proponent of using and integrating the models you subscribe to wherever you have them. This has let us integrate WorldLabs' Marble Gaussian Splats, for instance, and nobody else has done that. My plan is to add every provider over time, including generic API key-based compute providers like FAL and Replicate. I don't care if you pay for ArtCraft - I just want it to be useful.

Two disclaimers:

ArtCraft is "fair source" - I'd like to go the CockroachDB route and eventually get funding, but keep the tool itself 100% source available for people to build and run for themselves. Obsidian, but with source code. If we got big, I'd spend a lot of time making movies.

Right now ArtCraft is tied to a lightweight cloud service - I don't like this. It was a choice so I could reuse an old project and go fast, but I intend for this to work fully offline soon. All server code is in the monorepo, so you can run everything yourself. In the fullness of time, I do envision a portable OSS cloud for various AI tools to read/write to like a GitHub for assets, but that's just a distant idea right now.

I've written about the roadmap in the repo: I'd like to develop integrations for every compute provider, rewrite the frontend UI/UX in Bevy for a fully native client, and integrate local models too.

Donald Trump Has Built a Clicktatorship

The article examines how Donald Trump's use of social media and viral content creation could potentially lead to the rise of a 'clicktatorship' - a form of authoritarian rule enabled by the amplification of online engagement and the manipulation of public discourse.

Fintech CEO and Forbes 30 Under 30 alum has been charged for alleged fraud

The article reports that a fintech CEO and Forbes 30 Under 30 alum has been charged with alleged fraud, related to misrepresenting the company's financial performance and misusing investor funds.